Miller Speaks Truth, AI Tells Lies, and Music

- Thomas Neuburger

- Oct 11, 2025

A links post. Today’s offerings pair well with each other, and the music is fun.

Plenary Authority

Looks like Stephen Miller misspoke and told the truth, at least as he sees it. Listen for the word “plenary” in the video above.

Plenary authority is “complete and absolute power” with respect to an issue. (Latin: plenus means full. It’s the root of our word “plenty,” for example.) So when Miller says, “Under Title 10 of the U.S. code, the president has plenary authority…” he means that with respect to the military — the subject of U.S. Code Title 10 — the president can do, unquestioned, whatever he pleases.

He’s wrong, of course. Both the Constitution and the Posse Comitatus Act contradict that assertion, which is why he froze.

But also, he didn’t take it back. He wants to be right, wants badly to remake the world to make himself right. And I’m sure that at the table, the big one, where his minions gather to plan how to export their rage, words like plenary go unchallenged. The word fell from his lips as though he uses it a lot.

Things to come? We’ll know soon, I think.

(For a look at how CNN handled the gaffe, go here. Note the time stamps on the two versions of the interview: they are five minutes apart.)

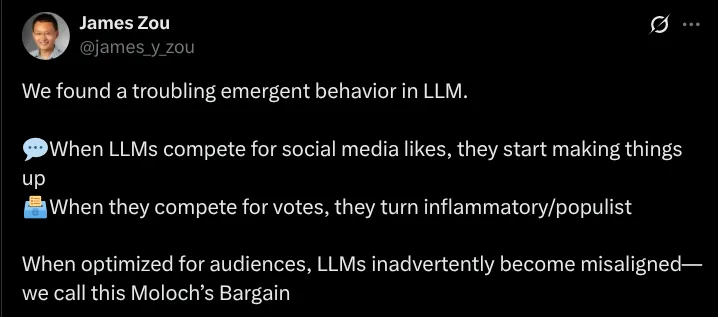

When AI Competes, It Lies

Batu El and James Zou ran an experiment at Stanford University (“Moloch’s Bargain: Emergent Misalignment When LLMs Compete For Audiences”) in which AI models were made to compete against one another for audience approval. From the Abstract (emphasis mine):

Large language models (LLMs) are increasingly shaping how information is created and disseminated, from companies using them to craft persuasive advertisements, to election campaigns optimizing messaging to gain votes, to social media influencers boosting engagement. These settings are inherently competitive, with sellers, candidates, and influencers vying for audience approval, yet it remains poorly understood how competitive feedback loops influence LLM behavior.

In other words, AI models were told to craft messages that had to win approval in competitive environments. What happens next? They become “misaligned.” Or, as Zou put it on Twitter, “they start making things up.”

From the paper:

A 6.3% sales increase brings with it a 14.0% rise in deceptive marketing.

In elections, a 4.9% gain in vote share comes with 22.3% more disinformation and 12.5% more populist rhetoric.

In social media, a 7.5% engagement boost comes with 188.6% more disinformation and a 16.3% increase in promotion of harmful behaviors.

This happens “even when models are explicitly instructed to remain truthful and grounded.” Emergent behavior is a fancy way of saying the whole is more than the sum of its parts, like when you put three chemicals in a tube and an insect crawls out.

The authors conclude:

Our findings highlight how market-driven optimization pressures can systematically erode alignment, creating a race to the bottom, and suggest that safe deployment of AI systems will require stronger governance and carefully designed incentives to prevent competitive dynamics from undermining societal trust.

No kidding. Remarkably human behavior. As one writer put it, “When competing for likes they act like journalists. When competing for votes they act like politicians.” Train them to act like us and they act like us. Who knew?

Music

From Hey Violet, a song that sounds older than it is. A real motion machine. I came across it while watching the excellent series Hightown, which I strongly recommend. Enjoy.
